Get BLR80 on Meta/Steam now!

Making of BLR80 - devblog

Intro

Hello. My name is Thomas Kuriakose.

And I am the guy who developed BLR80, a sci-fi virtual reality game for the Meta Quest and Rift.

In this post, I talk about how I made the game.

The year was 2020.

I had quit my day job and was preparing to get my feet wet with Unreal Engine. I was going to make BLR80, but I wasn't sure I could do that with UE4.

A little background: I have written games and other interactive 3D applications on my own game engines, for DOS, Windows, Mac OS and iOS. I had also been toying around with the Oculus SDK and the DK1 since 2013. So I was no stranger to real-time computer graphics and engine programming.

In 2020, though, I was sure about three things.

-1. I was going to make BLR80.

-2. I was NOT going to write another engine. (Why? Because it takes a lot of time, and I didn't want to repeat io and renderB.)

-3. I was NOT going to muck around in Unreal C++.

The last point meant I had to do it either in Blueprints or in Unity C#. I decided to try out UE4 Blueprints first.

To get my feet wet, I decided to port renderB to UE4 using only Blueprints. That turned out to be a success. You can check it out here. But that was for the PC.

I still wasn't sure about Blueprint performance on mobile-class hardware, such as the Quest.

I ran a few tests with proxies to stress-test the system. The results were promising, but it's one thing to have your little ideal test case run just fine, and another thing altogether to have a full-blown game with entire subsystems (resource handling, AI, audio, gameplay, environment elements) contending with each other in the game loop.

Nevertheless, I was hopeful. The first task in creating a neon-infused, cyberpunk-ish Indian city, complete with crowds, vehicles and villainous robots, was building the crowd system.

And the whole damn thing had to run in VR.

On the Meta Quest.

At 72fps.

This was the goal.

00. Crowd

Since the game takes place in a city, a dense dynamic crowd was important to me.

I love cities: sky-scraping buildings, the hustle and bustle. A hundred stories in every direction. And the lights.

I wanted to capture ALL of that in the game.

So... I decided to start with the crowd.

For a moment, I toyed with the idea of creating a crowd simulator: people milling about, interacting with each other, crossing the street, etc. It seemed like an exciting thing to work on. Especially during the pandemic, experiencing a crowded, eventful city while isolated in real life seemed worthwhile.

But if there is anything I have learnt over many personal projects, it is not to go down the path of trippy experiments.

Focus is a hundred "No"s.

So I ended up putting all the human meshes on rails.

To save on computation, I decided that the humans would have no animations, and therefore no limbs. This also helped keep the triangle count low. LODs (levels of detail) were also used to optimize mesh rendering.

Even with all of that, the crowd still didn't look dense. Adding enough human meshes to reach a satisfying visual density was too compute-intensive.

In the end, I stuck with humans on rails (splines). Each human mesh was assigned a random offset, speed and direction at load time. To help performance, all the human meshes on an entire spline could be turned on or off based on the player's location in the game.
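
The post doesn't show how the rails were set up, so here is a minimal Python sketch of the idea under stated assumptions: each spline is treated as a closed loop parameterized from 0 to 1, a plain polyline stands in for an Unreal spline component, and the names (`CrowdAgent`, `make_agents`, `point_on_polyline`, `spline_enabled`), the speed range and the activation radius are all hypothetical. In the game itself this logic lived in Blueprints.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class CrowdAgent:
    offset: float    # starting position along the spline, normalized to [0, 1)
    speed: float     # fraction of the loop covered per second (hypothetical units)
    direction: int   # +1 walks forward along the spline, -1 walks backward

    def param_at(self, t: float) -> float:
        """Normalized spline parameter at time t, wrapped so the agent loops forever."""
        return (self.offset + self.direction * self.speed * t) % 1.0


def make_agents(count: int, seed: int = 0) -> list:
    """Assign each agent a random offset, speed and direction once, at load time."""
    rng = random.Random(seed)
    return [
        CrowdAgent(
            offset=rng.random(),
            speed=rng.uniform(0.002, 0.006),
            direction=rng.choice((-1, 1)),
        )
        for _ in range(count)
    ]


def point_on_polyline(points, u):
    """Evaluate a closed polyline (a stand-in for a spline) at normalized parameter u."""
    n = len(points)
    seg_lengths = [math.dist(points[i], points[(i + 1) % n]) for i in range(n)]
    target = (u % 1.0) * sum(seg_lengths)
    for i, length in enumerate(seg_lengths):
        if target <= length or i == n - 1:
            a, b = points[i], points[(i + 1) % n]
            f = min(target / length, 1.0) if length > 0 else 0.0
            return (a[0] + (b[0] - a[0]) * f, a[1] + (b[1] - a[1]) * f)
        target -= length


def spline_enabled(spline_center, player_pos, radius=150.0) -> bool:
    """Turn a whole spline's crowd on or off based on the player's distance to it."""
    return math.dist(spline_center, player_pos) <= radius
```

Because each agent's position is a pure function of its load-time parameters and the clock, a disabled spline costs nothing and can be switched back on without any catch-up simulation.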

Human meshes also deflect away from the player, to provide a sense of presence in the game.
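
The deflection isn't described in detail. One simple way to get the effect, sketched here as an assumption rather than the game's actual method, is to push any agent within a radius of the player away along the player-to-agent direction, with a linear falloff. The function name `deflect`, the radius and the push distance are hypothetical (units are whatever the world uses, e.g. meters).

```python
import math


def deflect(agent_pos, player_pos, radius=1.5, max_push=0.75):
    """Return a 2D agent position pushed away from the player.

    Agents inside `radius` are displaced along the player-to-agent direction;
    the push is max_push at the player's position and fades linearly to zero
    at the edge of the radius.
    """
    dx = agent_pos[0] - player_pos[0]
    dy = agent_pos[1] - player_pos[1]
    dist = math.hypot(dx, dy)
    if dist >= radius:
        return agent_pos  # far enough away: stay on the rail
    strength = max_push * (1.0 - dist / radius)  # linear falloff
    if dist < 1e-6:
        nx, ny = 1.0, 0.0  # agent exactly on the player: pick an arbitrary direction
    else:
        nx, ny = dx / dist, dy / dist
    return (agent_pos[0] + nx * strength, agent_pos[1] + ny * strength)
```

In practice you would probably smooth the pushed offset over a few frames so meshes don't snap when the player moves.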

On the main spline, I used a trick where the human meshes loop around the player. This made it possible to always have a visually dense crowd around the player without increasing the mesh count.
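
The looping trick can be sketched as a modulo over a window of the main spline centered on the player. This is an assumed reconstruction, not the game's code: `wrap_into_window`, the window length and the distance units are hypothetical. An agent that drifts more than half a window behind the player reappears the same distance ahead, so a fixed set of meshes always surrounds the player.

```python
def wrap_into_window(agent_u: float, player_u: float, window: float) -> float:
    """Map an agent's distance along the main spline into a window around the player.

    agent_u may grow without bound (e.g. offset + speed * t). The result always
    lies in [player_u - window / 2, player_u + window / 2), so an agent leaving
    one end of the window re-enters at the other end.
    """
    half = window / 2.0
    rel = (agent_u - player_u + half) % window - half  # signed distance to the player
    return player_u + rel
```

As the player walks down the street, the same handful of meshes keeps re-entering the window ahead of them, which is what keeps the crowd dense at a constant mesh count.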

The human and vehicle meshes were bought on Sketchfab and altered for use in-game. Visit the credits page to see the artists whose work has been used in the game.

In total, there were about 576 human instances created from 12 unique human meshes.

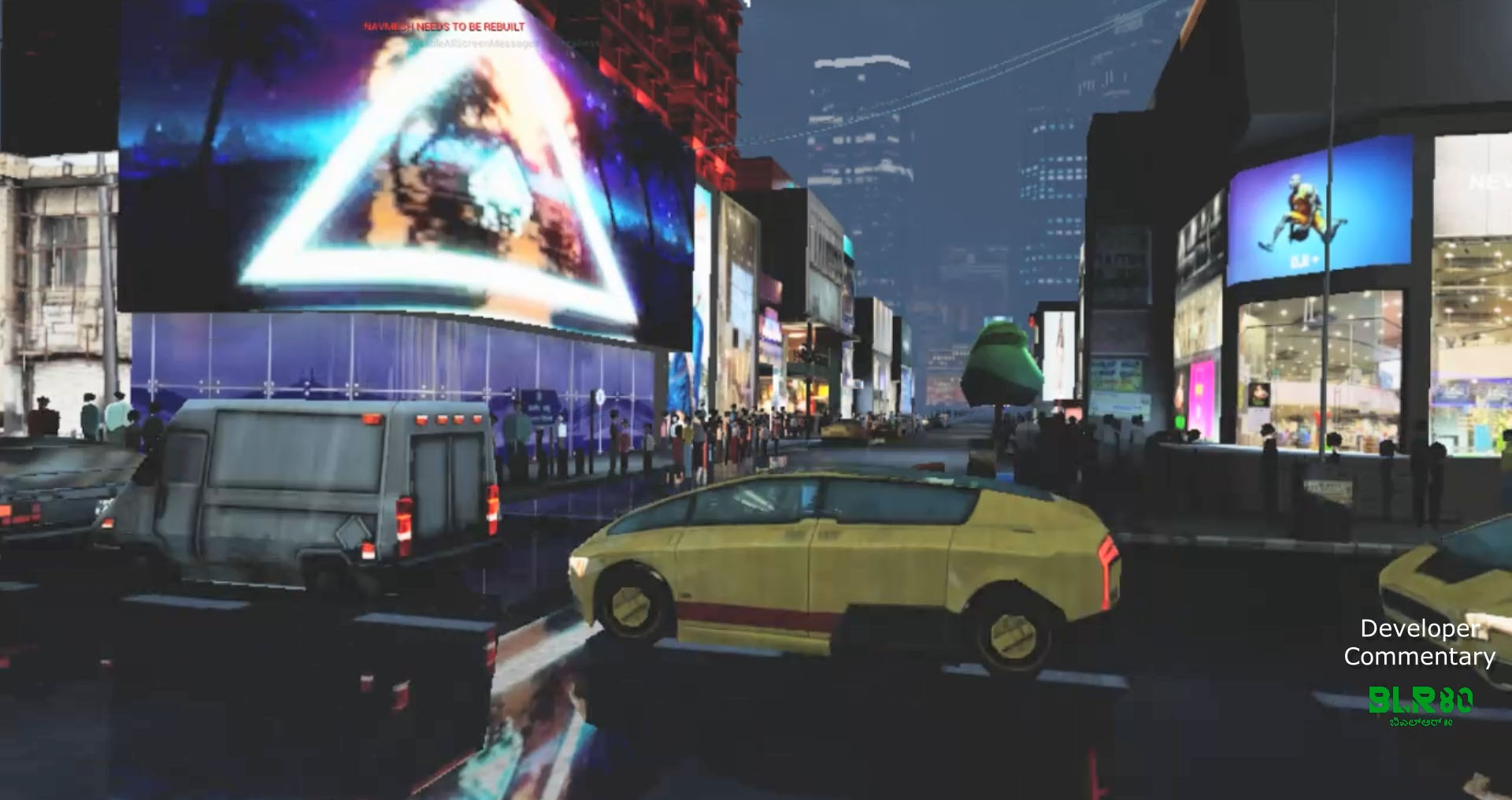

01. City

The game is set on M.G. Road, Bangalore.

To capture its essence, a lot of reference photographs were taken. Because of the COVID pandemic, it was possible to take relatively crowd-free photographs of the city.

Once enough reference photographs were collected, I set out to model the major buildings in a brute-force, straightforward manner. But this turned out to be inefficient time-wise, and the visuals were unsatisfactory.

So I adopted camera-matching techniques to quickly model the buildings and capture texture data from the images. Visually, this turned out to be exactly what was required, while also being quick.
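
Camera matching boils down to recovering a virtual camera that lines up with the photograph; once you have it, texture coordinates come from projecting each vertex back into the photo. The post doesn't name the tools used, so this sketch only shows that projection step with a standard pinhole model, assuming a principal point at the image center and a known world-to-camera rotation. `project_to_uv` and its parameters are hypothetical names.

```python
def project_to_uv(point, cam_pos, rotation, focal_px, width, height):
    """Project a world-space point into a matched photo and return normalized UVs.

    rotation: 3x3 world-to-camera matrix whose rows are the camera's
              right, up and forward axes.
    focal_px: focal length in pixels; the principal point is assumed to be
              the image center.
    Returns None if the point is behind the camera.
    """
    # Transform the point into camera space.
    d = [point[i] - cam_pos[i] for i in range(3)]
    x, y, z = (sum(rotation[r][c] * d[c] for c in range(3)) for r in range(3))
    if z <= 0:
        return None
    # Perspective divide, then pixel coordinates (origin top-left, y pointing down).
    px = width / 2 + focal_px * x / z
    py = height / 2 - focal_px * y / z
    return (px / width, py / height)
```

Running every vertex of a building mesh through a function like this gives UVs that sample the photograph directly, which is how modeling and texture capture can happen in the same pass.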

Filling the city block with buildings was far more challenging than I thought. I needed more buildings than the actual location had to offer, so I went around the city for more references. Once I had the references and the models, effort was needed to remove and alter real-world logos and brands. Photoshop had some great tools to help with that.

Coming up with fictional buildings was harder still, for me.

The city in the game is a fusion of fictional and actual buildings from various parts of Bangalore.

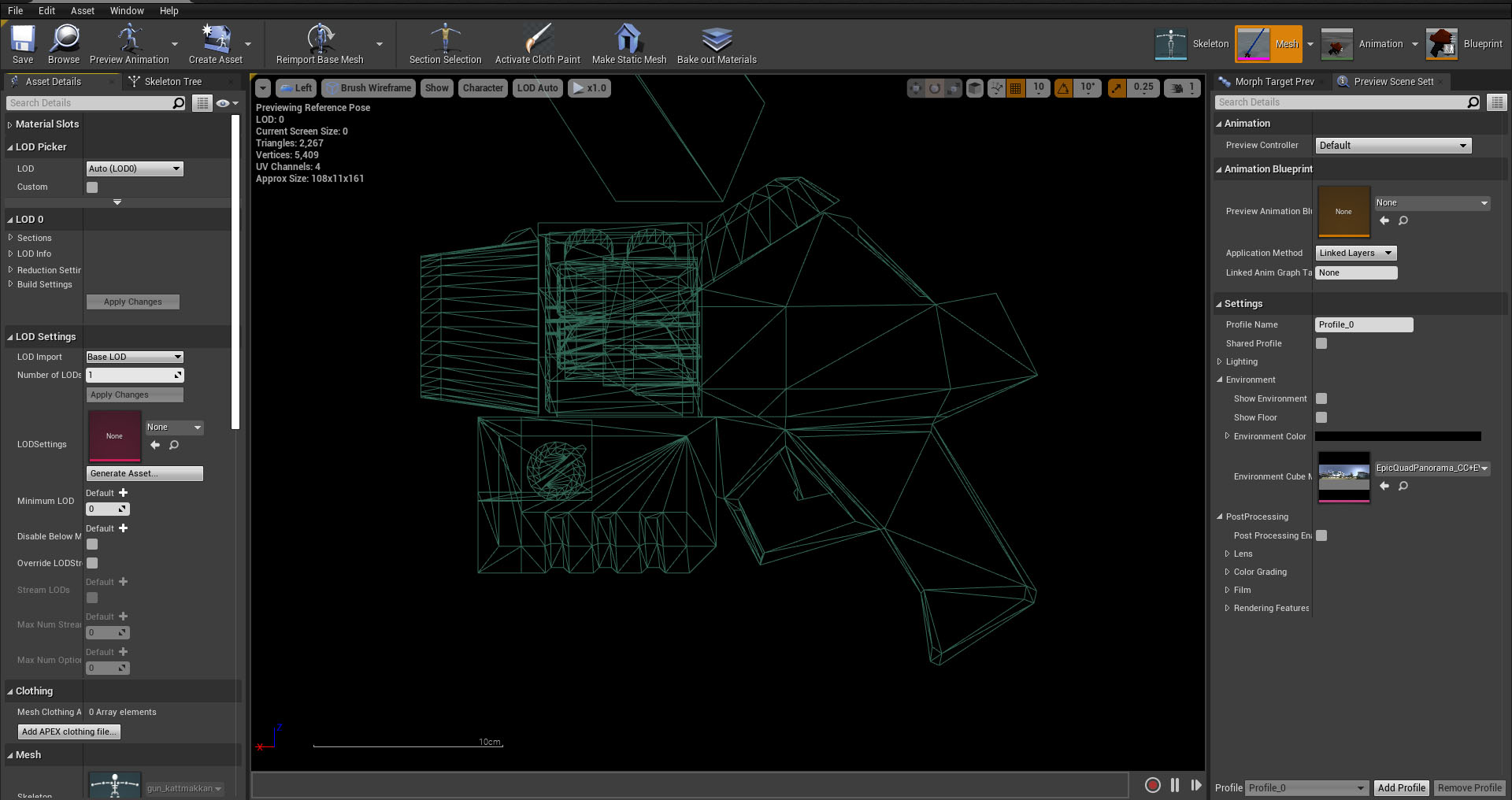

02. Weapons

I am from the Quake school (sorry, Epic) of gaming. The weapons in my games reflect that.

In fact, Quake 2 was the inspiration for the nail gun in BLR80. I liked the rotary mechanism on the weapon and implemented its animation in code. All the other weapon animations were made in 3ds Max and imported.
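
One common way to drive a rotary barrel from code is to let it spin up while the trigger is held and coast down when it is released. Here is a minimal per-frame update in that spirit; the function name `update_barrel` and all the speed and acceleration values are hypothetical, and in Unreal the returned angle would be applied to the barrel's relative rotation every tick.

```python
def update_barrel(angle_deg, speed_dps, firing, dt,
                  max_speed_dps=1440.0, spin_up=2880.0, spin_down=1080.0):
    """Advance a rotary barrel by one frame of length dt (seconds).

    While firing, angular speed ramps up toward max_speed_dps (degrees/second);
    when the trigger is released it decays to zero, so the barrel coasts to a stop.
    Returns the new (angle_deg, speed_dps).
    """
    if firing:
        speed_dps = min(max_speed_dps, speed_dps + spin_up * dt)
    else:
        speed_dps = max(0.0, speed_dps - spin_down * dt)
    # Integrate the angle with the updated speed and keep it in [0, 360).
    angle_deg = (angle_deg + speed_dps * dt) % 360.0
    return angle_deg, speed_dps
```

Keeping the spin-down slower than the spin-up gives the barrel a bit of visible inertia after the player stops firing.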

The particle effects were a straightforward implementation using Unreal Niagara.

For the rail gun's undulation effect, morph targets were created in 3ds Max and imported into Unreal, where the effect was manipulated in real time. Similarly, morph targets were created for the flamethrower particles, since alpha rendering on the Quest would cause a performance drop.
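
Morph targets (blend shapes) combine linearly: each vertex is the base position plus a weighted sum of per-target offsets, and animating the weights over time is what makes a shape undulate. The sketch below shows that blend plus a hypothetical sine-based weight driver; the driver, its frequency and the function names are assumptions, not taken from the game.

```python
import math


def blend_morphs(base, deltas, weights):
    """Morph-target blend, per vertex: v = base + sum_i(weights[i] * deltas[i])."""
    out = []
    for v_idx, (x, y, z) in enumerate(base):
        for delta, w in zip(deltas, weights):
            dx, dy, dz = delta[v_idx]
            x += w * dx
            y += w * dy
            z += w * dz
        out.append((x, y, z))
    return out


def undulation_weights(t, count, freq_hz=2.0):
    """Hypothetical driver: phase-shifted sine weights in [0, 1], so successive
    targets peak one after another and the shape appears to ripple."""
    return [0.5 + 0.5 * math.sin(2.0 * math.pi * (freq_hz * t - i / count))
            for i in range(count)]
```

Feeding `undulation_weights(t, n)` into `blend_morphs` each frame animates the mesh on the CPU in this sketch; in Unreal the equivalent would be setting morph target weights and letting the engine do the blend.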

All HUD readouts were implemented using Unreal UMG widgets.

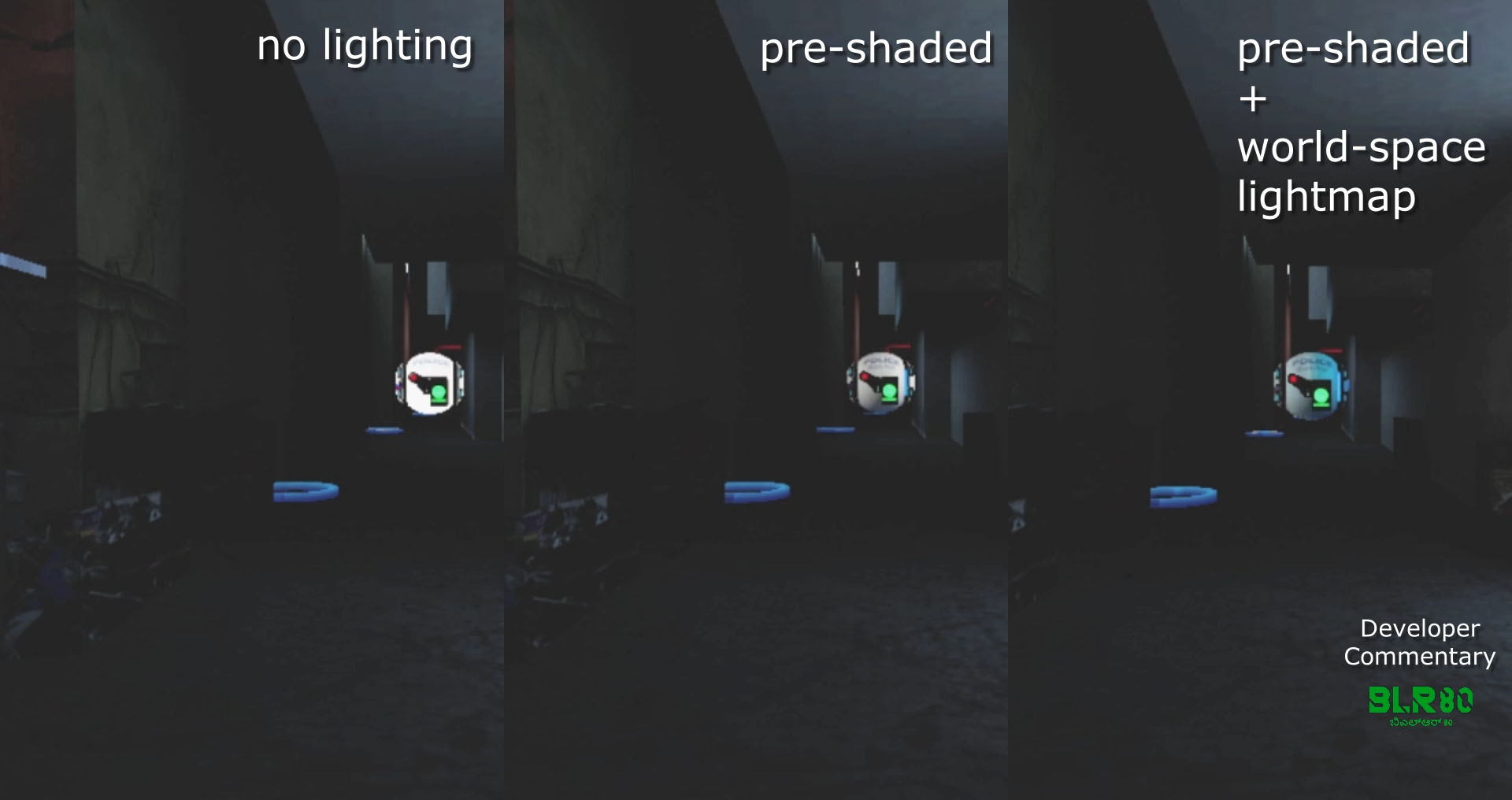

03. Lighting

All static assets were lit offline using V-Ray, with the lighting baked into the diffuse texture.

All dynamic assets were pre-lit to provide shading and then dynamically lit in-game with a single world-space lightmap.

What is a world-space lightmap, you ask?

Well, it's a lightmap that is projected onto the world using world-space coordinates rather than UV coordinates.
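
Here is a minimal sketch of a world-space lightmap lookup, assuming a top-down projection of world X/Y over the level's bounds, a single-channel intensity map, and that the sampled light simply multiplies the asset's pre-lit color. The post doesn't specify the projection axis or how the two are combined, so those choices and all the function names are hypothetical.

```python
def world_to_lightmap_uv(pos, bounds_min, bounds_max):
    """Planar top-down projection: world X/Y -> lightmap UV, clamped to [0, 1]."""
    u = (pos[0] - bounds_min[0]) / (bounds_max[0] - bounds_min[0])
    v = (pos[1] - bounds_min[1]) / (bounds_max[1] - bounds_min[1])
    return min(max(u, 0.0), 1.0), min(max(v, 0.0), 1.0)


def sample_bilinear(texels, u, v):
    """Bilinearly sample a 2D grid of scalar light intensities (rows = v, cols = u)."""
    h, w = len(texels), len(texels[0])
    x, y = u * (w - 1), v * (h - 1)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = texels[y0][x0] * (1 - fx) + texels[y0][x1] * fx
    bottom = texels[y1][x0] * (1 - fx) + texels[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def shade(prelit_rgb, pos, lightmap, bounds_min, bounds_max):
    """Modulate a dynamic asset's pre-lit color by the world-space light at its position."""
    u, v = world_to_lightmap_uv(pos, bounds_min, bounds_max)
    light = sample_bilinear(lightmap, u, v)
    return tuple(c * light for c in prelit_rgb)
```

Because the lookup is driven by position rather than by the mesh's own UVs, a moving object picks up the right light wherever it goes, and every dynamic asset can share the same single lightmap.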

04. Glow

All the various glows, strobing, streaking and pulsating effects were achieved through UV animation and a control texture.

This made it easy to rapidly integrate new effects without additional programming.

And since this was all integrated into the main shader, no material changes or additional draw calls were required.
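
To illustrate how one control texture can drive several effects, here is a sketch that evaluates a single control texel over time. The channel layout (mask, phase, mode), the three modes and the one-second cycle are hypothetical; in the actual material this would be shader math evaluated per pixel, with `scroll_uv` standing in for the UV animation used for effects like streaks.

```python
import math


def scroll_uv(u, v, t, speed_u=0.0, speed_v=0.5):
    """UV animation: offset texture coordinates over time and wrap them to [0, 1)."""
    return (u + speed_u * t) % 1.0, (v + speed_v * t) % 1.0


def glow_intensity(control, t):
    """Evaluate one texel of a hypothetical control texture at time t (seconds).

    control = (mask, phase, mode):
      mask  in [0, 1] gates the glow on or off for this texel,
      phase in [0, 1] staggers neighbouring lights within the cycle,
      mode picks the effect: 0 = steady, 1 = strobe (square wave), 2 = pulse (sine).
    """
    mask, phase, mode = control
    cycle = (t + phase) % 1.0  # position within a one-second cycle
    if mode == 0:
        wave = 1.0
    elif mode == 1:
        wave = 1.0 if cycle < 0.5 else 0.0
    elif mode == 2:
        wave = 0.5 + 0.5 * math.sin(2.0 * math.pi * cycle)
    else:
        raise ValueError(f"unknown mode {mode}")
    return mask * wave
```

Since the behaviour is selected by texel data rather than by separate materials, painting a new region of the control texture is enough to add an effect.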

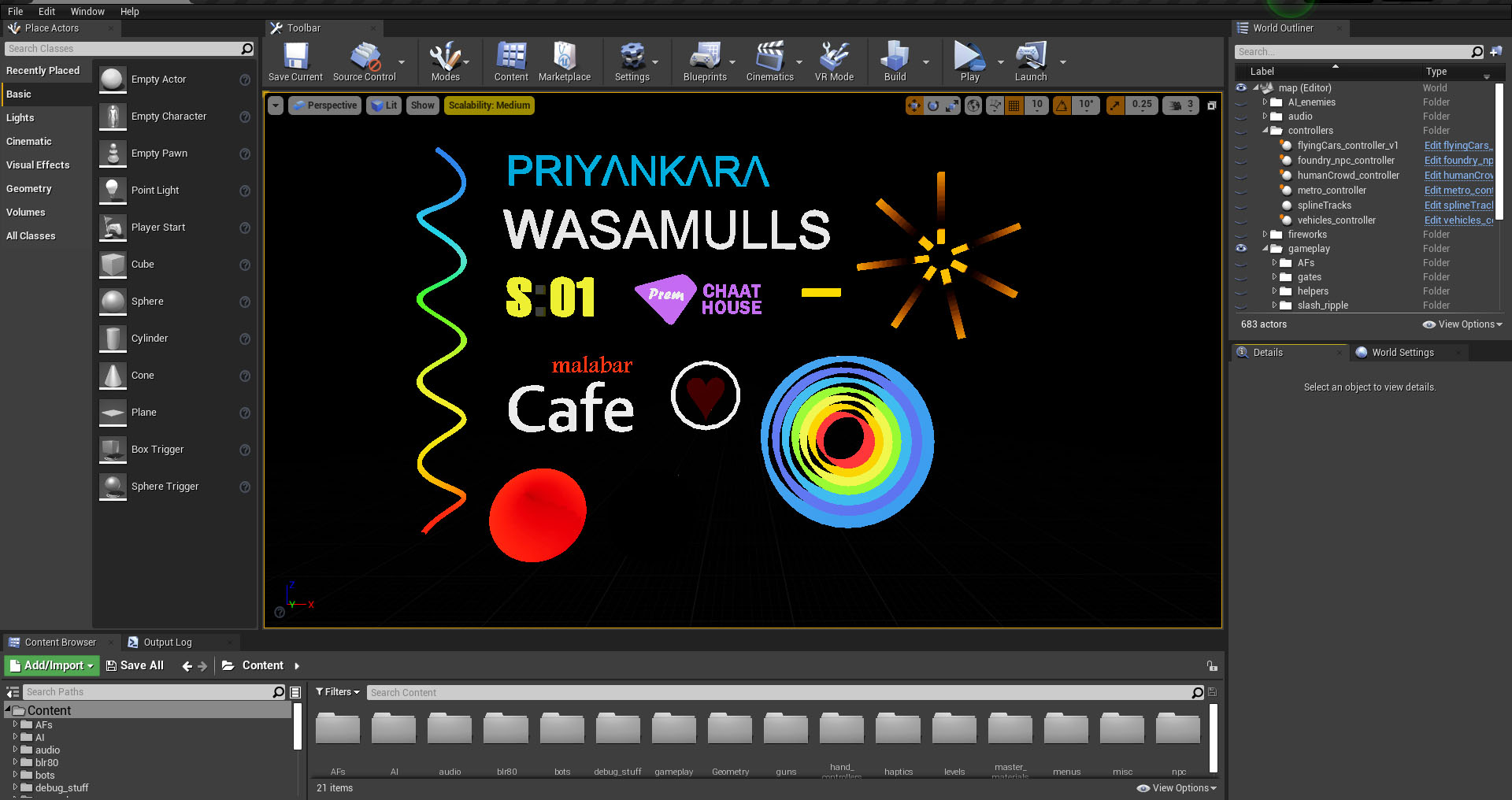

05. Jumbotrons

All the videos shown in the game are just one large 8K texture.

Offline, frames were extracted from video clips and tiled in Photoshop with a custom script. The result was used as a texture in Unreal and played back as a flipbook animation in real time.

Each video has 256 frames, which are cycled through in a loop.

Using a single texture instead of separate video files had several advantages: support for arbitrary display sizes and mipmaps, no need for run-time decoding of a video stream, and fewer material parameter switches.
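
The flipbook lookup is simple arithmetic on the frame index. Assuming 256 frames laid out as a 16 x 16 grid on an 8192 x 8192 texture, each cell would be 8192 / 16 = 512 px square; the post doesn't give the actual layout, and if several videos share the texture the cells would be smaller. `flipbook_uv`, the frame rate and the row-major, top-left-origin ordering are assumptions.

```python
def flipbook_uv(u, v, t, fps=24.0, cols=16, rows=16, frames=256):
    """Map a screen's local UV (0..1) to the current frame's cell in a flipbook atlas.

    Frames are assumed to be laid out left-to-right, top-to-bottom, with the UV
    origin at the top-left. The video loops every `frames` frames.
    """
    frame = int(t * fps) % frames
    col, row = frame % cols, frame // cols
    return (col + u) / cols, (row + v) / rows
```

Since the whole lookup is just a scale and offset of the UVs, any screen size can reuse the same texture and its mipmaps.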

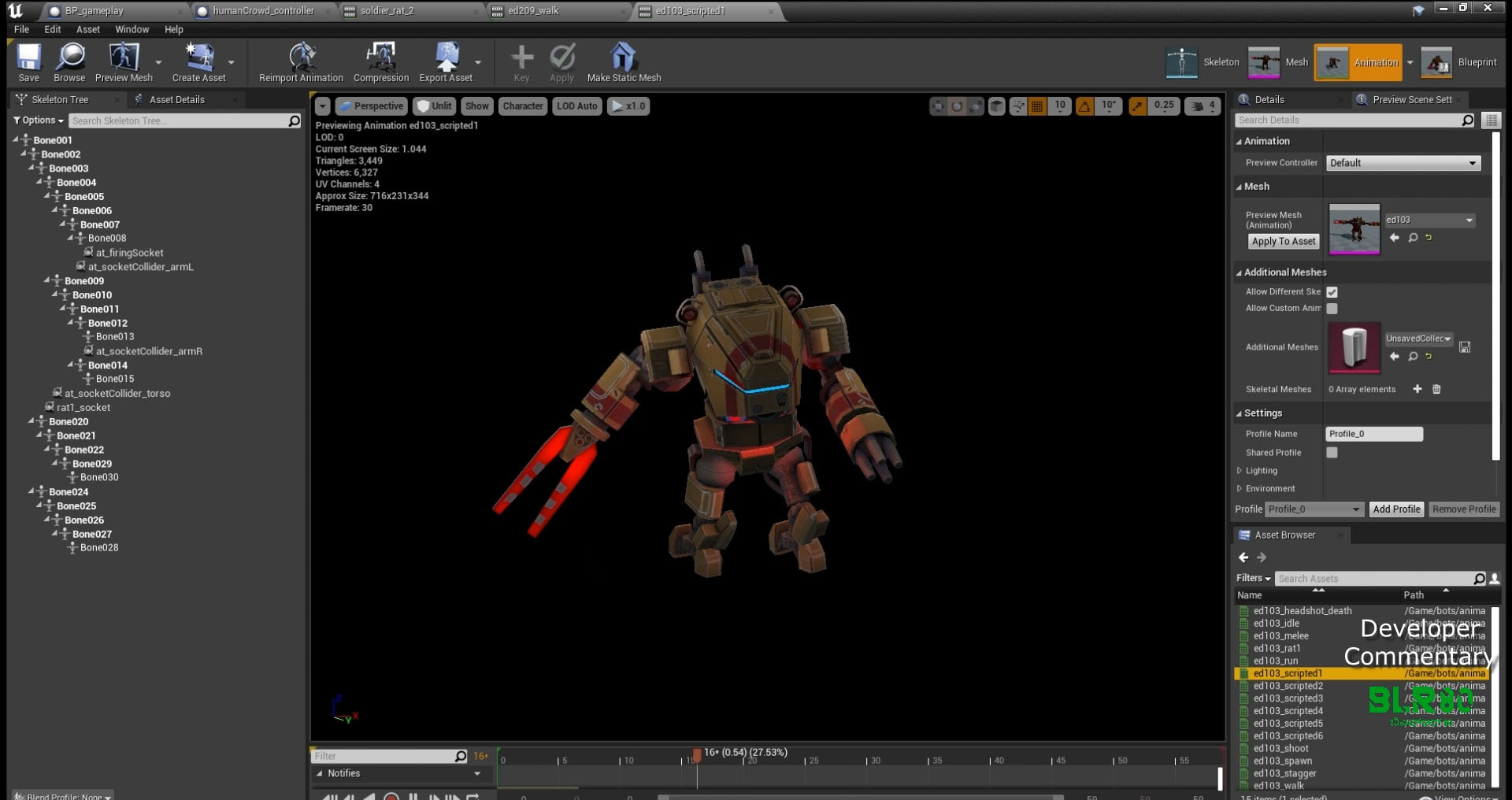

06. Animations

All animations were made in 3ds Max and brought into Unreal Engine.

07. OST / VO / Audio effects

The soundtrack for the game was performed and sung by rZee.

The track was provided with and without vocals, so that I could edit and mix it as the game required.

The soundtrack was the first component to be finished. It was completed in about 3 months, very early in the game's development timeline.

The voice-overs in the game were performed by me. The languages featured are English, Kannada and Malayalam.

Why Malayalam, you ask?

Except for the soundtrack, all audio was captured on my phone, then edited and mixed in Audacity.

The audio effects, too, are a combination of various stock and custom sounds.

The city soundscape is actual audio captured on location, both on M.G. Road and in other parts of Bangalore, then layered and mixed with other ambient sounds.

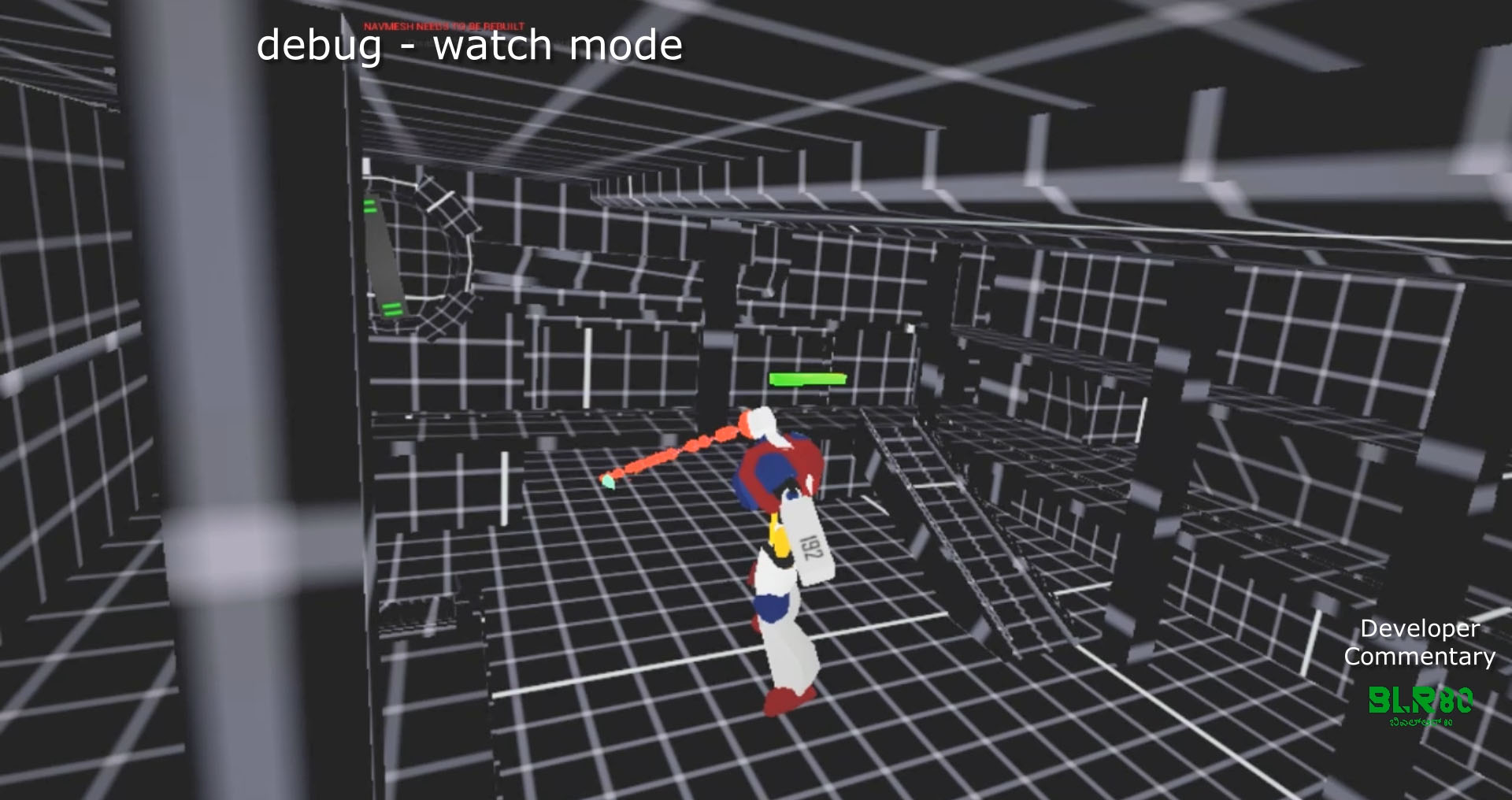

08. AI

All programming for BLR80 was done in Blueprints, even the bot AI code.

The AI in BLR80 is a simple hierarchical finite state machine. For pathfinding and navigation, the built-in AI controller system was used.

The bots in BLR80 come in different flavours: there are the stealth types and the aggressive, in-your-face types. The stealth types advance by sneaking, while the aggressive types make a beeline towards you, the player.
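
A hierarchical state machine nests sub-states inside top-level states. This toy version, with hypothetical state names (`Idle`, `Engage`, `Sneak`, `Charge`, `Attack`) and an assumed attack range, shows how the two bot flavours could share one top-level Engage state while differing only in their approach sub-state. Pathfinding is left out, since the game delegated that to Unreal's built-in AI controller.

```python
from dataclasses import dataclass


@dataclass
class Bot:
    flavour: str          # "stealth" or "aggressive"
    state: str = "Idle"   # top-level state: "Idle" or "Engage"
    substate: str = ""    # sub-state inside "Engage"


def approach_substate(bot: Bot) -> str:
    """The only place the two flavours differ: how they close the distance."""
    return "Sneak" if bot.flavour == "stealth" else "Charge"


def step(bot: Bot, sees_player: bool, dist_to_player: float,
         attack_range: float = 5.0) -> Bot:
    """Advance the two-level state machine by one decision tick."""
    if bot.state == "Idle":
        if sees_player:
            bot.state, bot.substate = "Engage", approach_substate(bot)
    elif bot.state == "Engage":
        if not sees_player:
            bot.state, bot.substate = "Idle", ""  # lost the player: drop back out
        elif dist_to_player <= attack_range:
            bot.substate = "Attack"
        else:
            bot.substate = approach_substate(bot)
    return bot
```

Keeping the flavour difference inside a single sub-state means new bot types only need a new approach behaviour, not a new top-level machine.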

09. Technology

BLR80 is powered by Unreal Engine 4 and was developed entirely with Blueprints.

I love Unreal, and without it I would not have been able to release BLR80 anytime in 2022.

10. Conclusion

Work on BLR80 began on July 13th, 2020 and was finished by September 2022.

3x the time I had originally thought it would take.

It runs on the Quest, Quest 2 and Rift, and for the most part holds up at 72Hz.

It was NOT all fun and games, making it.

Trudging on for weeks on end was the norm, and then there would be a brief moment of joy when the various parts came together and interconnected well.

This cycle would continue until the modeling, animations, texturing, gameplay, level design, AI, story, voice-overs, audio-editing, audio effects, visual effects, website design, trailers, teasers, marketing shots, programming were all done.

The game drew inspiration from the movies and games that I grew up on.

And I tried to create the world I'd like to see in VR. I am happy with what I have made, though I imagine that in the hands of a better artist it would have turned out a lot better.

I like BLR80. It is a game I would buy.
